We Trained the C-Suite on AI. Here’s What Actually Happened.

They did not save the $8M that was published. It was closer to $3M. Still nice. "Do Not Believe PR About AI" should be the actual article name :)

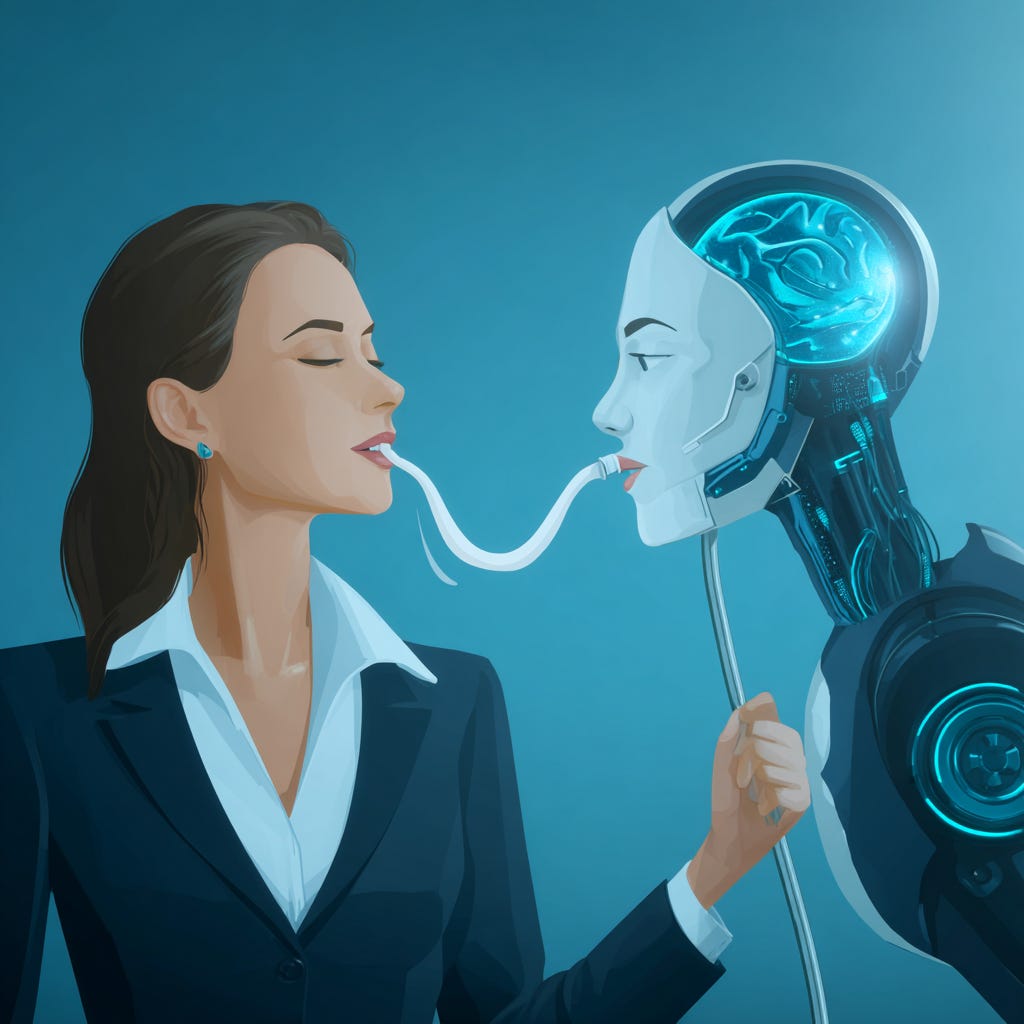

Let me be blunt: nobody told us the hardest part of executive AI transformation wasn’t the technology.

It was getting a CFO to trust an AI agent handling her financial analysis.

We built the AI Executive Transformation Program on paper.

Twelve C-level executives, one-day Prague workshop, 90-day advisory engagement, $50,000 price tag.

The framework promis…